AI Gateway & Model Management

Access OpenAI, Anthropic, and Ollama models from a single point with smart routing.

Heart of Technology

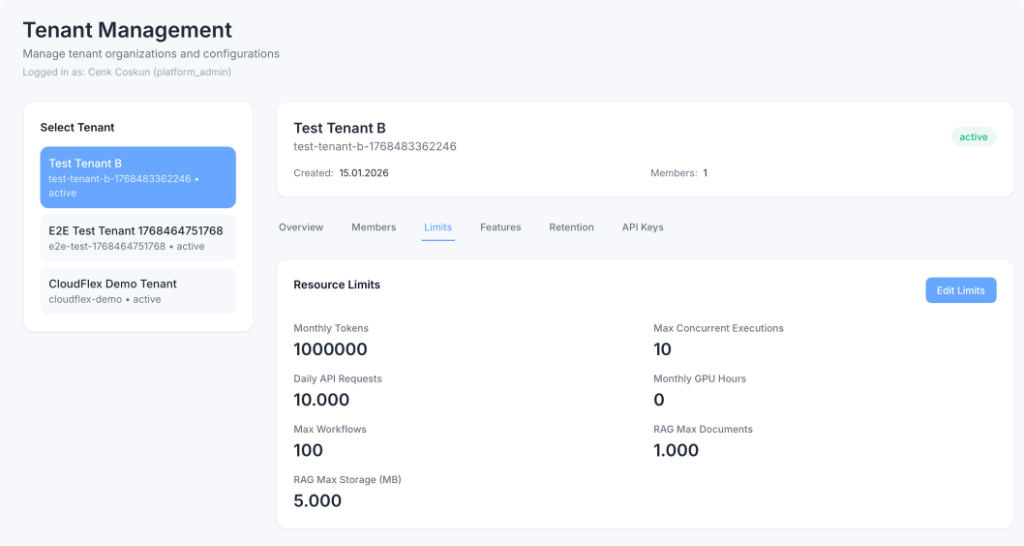

Designed to prevent model fragmentation and keep costs under control in enterprise architectures, AI Gateway routes all requests through a single, centralized system. It provides a seamless AI experience with automatic retries, rate limiting, and per-provider quota management. With GPU cluster support, you can also serve your own open-source models through Ollama with high performance.
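The gateway's internals are not documented on this page. The following is only a minimal Python sketch of two of the mechanisms named above: a token-bucket rate limiter and retries with exponential backoff. `TokenBucket`, `TransientError`, and `call_with_retry` are hypothetical names, and the rates and delays are illustrative assumptions rather than product defaults. The clock and sleep functions are injectable so the behavior can be checked without real waiting.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class TokenBucket:
    """Allows `rate` requests per second on average, with bursts up to `capacity`."""

    def __init__(self, rate: float, capacity: int,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rate = rate
        self.capacity = capacity
        self.tokens = float(capacity)  # start with a full bucket
        self.clock = clock
        self.last = clock()

    def try_acquire(self) -> bool:
        """Take one token if available; return False if the caller is rate-limited."""
        now = self.clock()
        # Refill in proportion to elapsed time, capped at capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


class TransientError(Exception):
    """Hypothetical marker for retryable upstream failures (timeouts, 5xx, ...)."""


def call_with_retry(fn: Callable[[], T], max_attempts: int = 3,
                    base_delay: float = 0.5,
                    sleep: Callable[[float], None] = time.sleep) -> T:
    """Call `fn`, retrying on TransientError with delays of base_delay * 2**attempt."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(max_attempts):
        try:
            return fn()
        except TransientError:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the last error
            sleep(base_delay * 2 ** attempt)
    raise AssertionError("unreachable")
```

In this sketch a gateway would check the bucket before forwarding a request and wrap the upstream call in the retry helper, so brief provider hiccups are absorbed instead of surfacing to the caller.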

Use Cases

Centralized API Key Management

Quota-controlled access for all teams via a single key.
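One way to picture quota control behind a single key is a usage ledger keyed by team and provider. The sketch below assumes the gateway has already resolved the caller's key to a team name; `QuotaLedger`, `QuotaExceeded`, and the token-based limits are hypothetical and not part of a documented AI Gateway API.

```python
from dataclasses import dataclass, field


class QuotaExceeded(Exception):
    """Raised when a request would push a team past its budget."""


@dataclass
class QuotaLedger:
    """Tracks token usage per (team, provider) against configured limits."""

    limits: dict[tuple[str, str], int]
    used: dict[tuple[str, str], int] = field(default_factory=dict)

    def charge(self, team: str, provider: str, tokens: int) -> int:
        """Record `tokens` of usage and return the remaining budget."""
        key = (team, provider)
        if key not in self.limits:
            raise QuotaExceeded(f"{team} has no quota for {provider}")
        spent = self.used.get(key, 0) + tokens
        if spent > self.limits[key]:
            # Reject without recording, so the ledger never exceeds the limit.
            raise QuotaExceeded(f"{team} would exceed its {provider} quota")
        self.used[key] = spent
        return self.limits[key] - spent
```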

Cost-Effective Routing

Automatic routing of simple tasks to cheaper models (Llama 3, GPT-3.5).
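A cost-aware router can be sketched as picking the cheapest model whose capability tier covers the request. The catalog, the relative cost figures, the placeholder `large-model` entry, and the prompt-length heuristic in `required_tier` are all illustrative assumptions; the page does not describe how AI Gateway decides that a task is simple.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Model:
    name: str
    provider: str
    relative_cost: float  # illustrative units, not real prices
    tier: int             # 1 = simple tasks, 2 = complex tasks


# Hypothetical catalog; "large-model" is a placeholder for a premium model.
CATALOG = [
    Model("llama3", "ollama", 0.0, 1),
    Model("gpt-3.5-turbo", "openai", 1.0, 1),
    Model("large-model", "anthropic", 10.0, 2),
]


def required_tier(prompt: str, needs_tools: bool = False) -> int:
    """Naive heuristic: short prompts without tool use count as simple."""
    return 1 if len(prompt) < 500 and not needs_tools else 2


def route(prompt: str, needs_tools: bool = False) -> Model:
    """Return the cheapest model whose tier is high enough for the request."""
    tier = required_tier(prompt, needs_tools)
    eligible = [m for m in CATALOG if m.tier >= tier]
    return min(eligible, key=lambda m: m.relative_cost)
```

Because the local Ollama model has zero marginal cost in this sketch, it wins every simple request; a production policy would presumably also weigh latency, answer quality, and available GPU capacity.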

Multi-Provider Failover

Automatic fallback to Anthropic if a provider (e.g., OpenAI) goes down.
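Failover can be sketched as trying providers in a configured order and returning the first successful response. `ProviderDown` and `complete_with_failover` are hypothetical names, and the provider callables stand in for real SDK calls.

```python
from typing import Callable, Sequence


class ProviderDown(Exception):
    """Hypothetical error signalling a provider outage (e.g. connection refused, 503)."""


def complete_with_failover(
    prompt: str,
    providers: Sequence[tuple[str, Callable[[str], str]]],
) -> tuple[str, str]:
    """Try each (name, call) pair in order; return (provider_name, response) from the first success."""
    errors: list[str] = []
    for name, call in providers:
        try:
            return name, call(prompt)
        except ProviderDown as exc:
            errors.append(f"{name}: {exc}")  # remember why this provider failed
    raise ProviderDown("all providers failed: " + "; ".join(errors))
```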

Private Cloud for Local Models

Internal serving of local models on Ollama for workloads that involve sensitive data.
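The sketch below assumes a simple policy: prompts that match a sensitive-data pattern go to an internal Ollama server, and everything else may go to an external provider. The regex patterns are deliberately naive placeholders, and `localhost:11434` is Ollama's default address, which an in-cluster deployment would replace with its own service URL. The function only builds the request (URL and JSON body), so the routing decision can be inspected without network access.

```python
import json
import re

# Ollama's default local address; an in-cluster service URL would replace it (assumption).
OLLAMA_CHAT_URL = "http://localhost:11434/api/chat"
EXTERNAL_CHAT_URL = "https://api.openai.com/v1/chat/completions"

# Naive placeholder detectors; real deployments need proper data classification.
SENSITIVE_PATTERNS = [
    re.compile(r"[\w.+-]+@[\w-]+\.[\w.]+"),          # e-mail addresses
    re.compile(r"\bconfidential\b", re.IGNORECASE),  # explicit confidentiality marker
]


def is_sensitive(text: str) -> bool:
    return any(p.search(text) for p in SENSITIVE_PATTERNS)


def build_request(prompt: str, local_model: str = "llama3",
                  external_model: str = "gpt-3.5-turbo") -> tuple[str, str]:
    """Return (url, JSON body); sensitive prompts stay on the internal Ollama server."""
    messages = [{"role": "user", "content": prompt}]
    if is_sensitive(prompt):
        body = {"model": local_model, "messages": messages, "stream": False}
        return OLLAMA_CHAT_URL, json.dumps(body)
    body = {"model": external_model, "messages": messages}
    return EXTERNAL_CHAT_URL, json.dumps(body)
```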

Technical Details

- p95 Latency: < 120 ms

- Protocols: OpenAI SDK-compatible API, Anthropic API, REST

- Container Support: Kubernetes Pod Scaling

24/7 support is included for enterprise license holders.

Explore More

Manage all of your AI processes through integration with the FlexAI ecosystem.